Blog Date: August 11, 2022

NSX-ALB Controller version: 22.1.1

vSphere version 7.0.3 Build 20150588

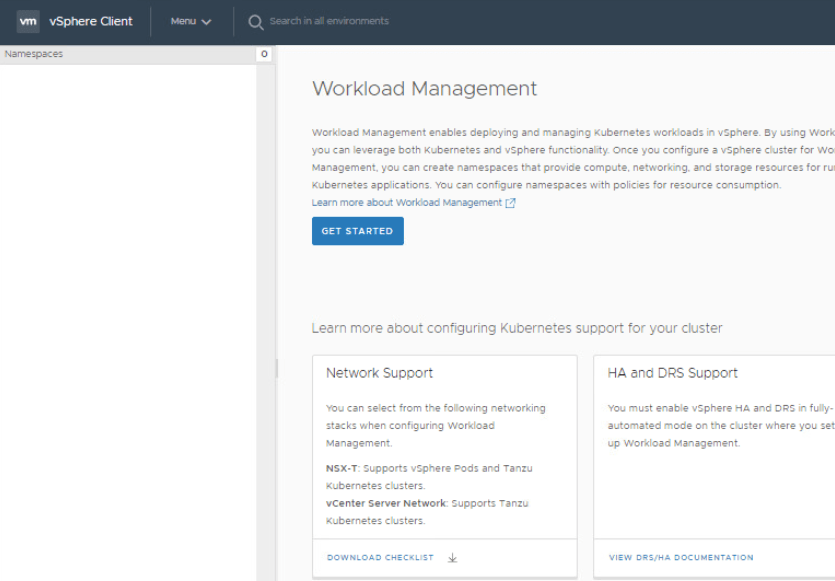

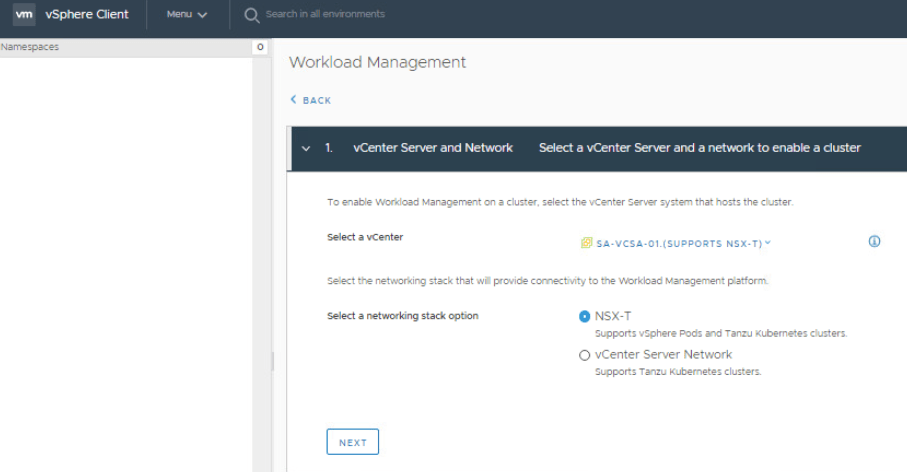

In my previous blog, vSphere with Tanzu: NSX-ALB Controller Requirements and Deployment Prep, I went over the basic requirements and prep work for the NSX-ALB controller to use with Tanzu. In this blog, I’ll demonstrate deploying a basic NSX-ALB controller into my home lab, with no NSX-T.

NOTE: Production deployments should use an NSX-ALB controller cluster. In my lab, however, I am only deploying a single controller for this example.

NSX-ALB Controller Deployment

Obtain the NSX-ALB controller by following VMware KB Article 82049. In this example, I am using version 22.1.1.

Import the Controller OVA into the vCenter.

OVA Import Step 3: Deploy the controller on a compute cluster that has access to the networks configured for this deployment. Click NEXT.

OVA Import Step 5: Select storage that the desired Compute Cluster has access to.

OVA Import Step 6: Select the management network distributed port group recorded in the Excel spreadsheet we filled in earlier, and click NEXT.

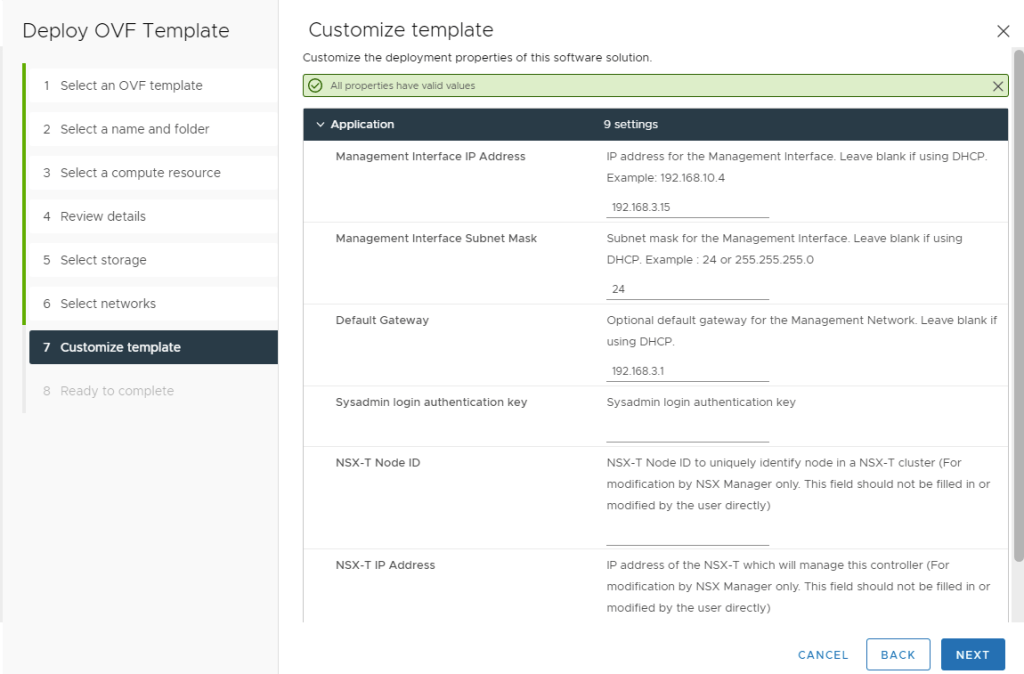

OVA Import Step 7: Fill out the networking information for the NSX-ALB controller. Here we just need to add the controller IP, subnet, and gateway. Click NEXT.
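Before clicking NEXT, it can be worth sanity-checking the three values, since a typo here usually means redeploying the OVA. The following is only a minimal sketch using Python's standard `ipaddress` module. The function name `check_controller_network` and the addresses in the usage line are hypothetical examples of mine, not values from this lab or anything shipped with NSX-ALB.

```python
import ipaddress


def check_controller_network(ip: str, netmask: str, gateway: str) -> list[str]:
    """Return a list of problems found; an empty list means the values look consistent."""
    problems = []

    # Build the subnet from the controller IP and mask; strict=False allows host bits.
    network = ipaddress.ip_network(f"{ip}/{netmask}", strict=False)
    ip_addr = ipaddress.ip_address(ip)
    gw_addr = ipaddress.ip_address(gateway)

    # The gateway has to be reachable on the controller's own subnet.
    if gw_addr not in network:
        problems.append(f"gateway {gateway} is not inside {network}")

    # The controller must not reuse the gateway address.
    if ip_addr == gw_addr:
        problems.append("controller IP and gateway are the same address")

    # For ordinary subnets, the network and broadcast addresses are not usable hosts.
    if network.prefixlen < 31 and ip_addr in (
        network.network_address,
        network.broadcast_address,
    ):
        problems.append(f"{ip} is the network or broadcast address of {network}")

    return problems


# Hypothetical example values; substitute the ones from your own planning sheet.
print(check_controller_network("192.168.10.20", "255.255.255.0", "192.168.10.1"))
```

This only checks that the numbers agree with each other. It does not confirm that the IP is free or that the port group actually carries that subnet.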

OVA Import Step 8: Check for accuracy, and then click FINISH to start the deployment.

Once the NSX-ALB OVA is deployed, start the VM. Wait for the VM to fully boot, and then access the web interface.
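The controller can take a while to come up after power-on. If you would rather not keep refreshing the browser, a small polling script can tell you when the HTTPS port starts accepting connections. This is a rough sketch using only the Python standard library. The function name `wait_for_port` is mine, and I am assuming the web interface is served on the default HTTPS port 443. An open port only means the web server is listening, so the UI may still need a little longer before the login page renders.

```python
import socket
import time


def wait_for_port(host: str, port: int = 443, timeout: float = 600.0,
                  interval: float = 10.0) -> bool:
    """Poll host:port until a TCP connection succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful TCP handshake means something is listening on the port.
            with socket.create_connection((host, port), timeout=5):
                return True
        except OSError:
            # Refused, unreachable, or timed out: the service is not up yet.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Usage (hypothetical controller address, replace with your own):
# wait_for_port("192.168.10.20")
```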

On the first login, you will need to create the admin password. Click CREATE ACCOUNT.

After the admin account is created, you’ll need to add the DNS resolver(s) and DNS Search Domain. Click NEXT.

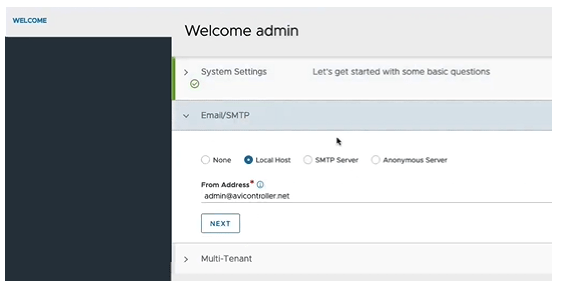

Add the “from” email address of your choosing. For this example, I am just using Local Host. Click NEXT.

For multi-tenancy, the defaults can be used unless otherwise specified. Check the “Setup Cloud After” box in the lower right because we want to configure the cloud component later, and click SAVE.

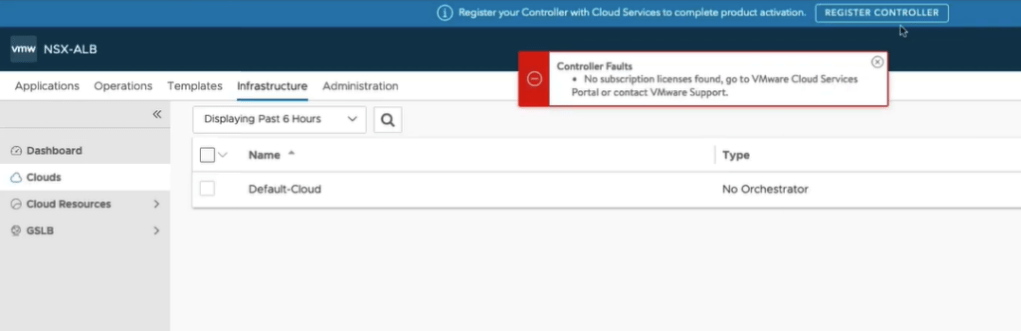

Now we are logged into the admin interface and immediately receive a controller faults error message stating that the controller doesn’t have license/subscription information, so we need to add it.

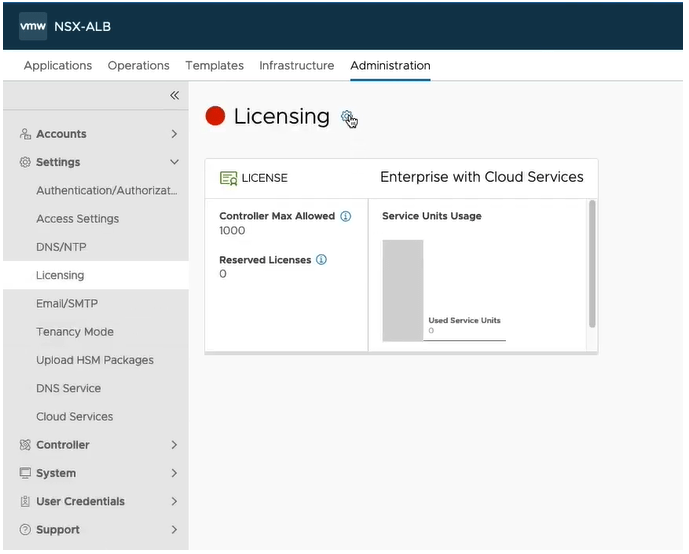

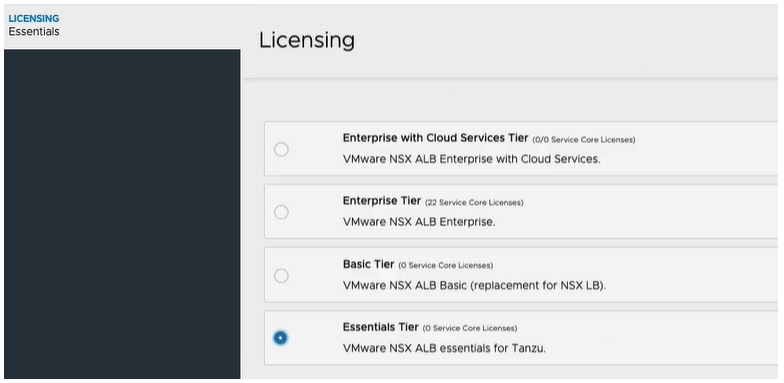

Click the Administration tab, and then on the left, expand Settings, and click Licensing. Click the gear.

Select the Essentials Tier for Tanzu license. Click SAVE.

You can see the interface has changed and now wants us to add a license key. However, we are in Essentials mode and can only use Essentials features, so we do not need to change anything.

That covers the basic deployment for the NSX-ALB controller. In my next blog, I will walk through the process of assigning a signed certificate from my Microsoft CA to the controller. I will also show how to create and assign the self-signed certificate to the controller. Stay tuned.
